- gunshots

- slaps

- door slams

- a dog's short warning growl prior to bark

- stepping on a dry leaf

- slowly tearing a sheet of paper

- closing a door slowly

- an entire thunderclap

- Indoors (small enclosed area with a great deal of absorbency)

- outdoors (open unconfined area)

- an explosion will sound like a gunshot

- a voice will sound like the cartoon chipmunk character

- the roaring power?

- the loudness or the attack?

- the inordinately long sustain and decay?

The loudness of a sound depends on the intensity of the sound stimulus. A dynamite explosion is louder than a cap pistol because of the greater number of air molecules the dynamite is capable of displacing. Loudness becomes meaningful only if we are able to compare it with something. The sound of a gunshot may be deafening in a small room, but go unnoticed if fired in a subway station when a train is roaring past. (A film that uses this in the narrative is Sleepers, in which a man is shot at the same moment as an aircraft is landing.)

"Equal loudness"

Humans are most sensitive to frequencies in the midrange (250 Hz - 5000 Hz). When two sounds, a bass sound and a midrange sound, are played at the same decibel level, the listener perceives the midrange sound to be louder. This is why a clap of thunder in a horror movie may contain something so unweatherlike as a woman's scream.

Rhythm is a recurring sound that alternates between strong and weak elements. Wooden pegs, suspended by wires in a wooden frame to suggest the sound of a large group of people marching in order, will be believable if the correct rhythm is supplied. If the rhythmic cadence were ignored, the wooden pegs would sound like wooden pegs drummed mechanically on a wooden surface.

An envelope of sound is composed of a sound's attack, sustain, and decay.

Attack

The way a sound is initiated is called attack. There are two types of attack:

Fast attack

The closer the attack of a sound (A) is to the peak (B) of a sound, the faster its attack is. Sounds that have a fast attack are..

Sounds that have a slow attack take longer to build to the sustain level. Sounds that have a slow attack are…

Once a sound has reached its peak, the length of time that the sound will sustain depends on the energy from the source vibrations. When the source sound stops, the sound will begin to decay. Manipulating the sustain time of a sound is yet another way of either modifying a sound or creating a totally new one. Suppose you have the sound of an elevator starting, running, and stopping, all within 25 seconds, but the script calls for a scene to be played in an elevator that runs for 60 seconds. Edit the sustain portion: make it into a loop. (If you have a computer, just copy and paste.) Take care to avoid sudden bursts of level change.

The decrease in amplitude when a vibrating force has been removed is called decay. The actual time it takes for a sound to diminish to silence is the decay time. How gradually the sound decays is its rate of decay.

Listening to a sound tells if it is...

- little decay and with very little or no reverberation

- long decay with an echo

Whenever you are editing a sound, you must allow enough room at the tail for the fade. (This includes sound on the Internet!)

By increasing or decreasing the playback speed you can change the properties of a sound effect. Played at twice as fast as the recorded speed ..

Sound of an Atomic Bomb Detonation

- dynamite explosion of a building being detonated

Sustain and Decay

- roar of a huge waterfall

Speed

- dynamite explosion and waterfall roar [1] at slow speed

In utilizing the components of a sound to create other sounds, the step that must be taken is disassociating the names of the sounds from the sounds themselves. Although there are millions of names for sounds, the sounds themselves all fall into certain frequency parameters that can be manipulated by the nine components of sound. Therefore, the fact that a waterfall record was selected is of no consequence. One waterfall sound is much the same as any other waterfall sound. The only thing of interest, as far as sound effects are concerned, is the magnitude of the waterfall: its roaring power. (The actual sound source may even be a train roaring through a station.) Because this sound offers no identification other than a constant roar, it can readily be adapted for other sounds.

When a believable sound is matched to a picture, there is never any doubt in anyone's mind that the sound is authentic. If the sound is a shade too fast or too slow, it will cause many viewers to think that "something" was not quite right. Not one viewer, not one critic, not even the scientists who actually developed the bomb complained about this odd mixture of sounds when they appeared on the TV news in the early 1950s. What everyone heard convincingly matched the picture. One aspect of this audiovisual phenomenon is synchresis.

1. A record was slowed from 78 rpm to approximately 30 rpm. The basic principle behind this is to slow a sound to the point where it is basically a tone of indistinct noise.

Edited excerpts p 53 -70 "What is a sound effect?" Robert L Mott: Sound effects, Radio, TV, and Film
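The footnote about slowing a record from 78 rpm to roughly 30 rpm can be turned into a little arithmetic. The sketch below assumes plain speed change without any pitch correction, so every frequency is scaled by the same ratio and the duration stretches by its inverse. The function names and the 10-second clip length are hypothetical, chosen only for illustration.

```python
import math


def speed_ratio(original_rpm: float, playback_rpm: float) -> float:
    """Factor by which every frequency in the recording is multiplied."""
    return playback_rpm / original_rpm


def pitch_shift_octaves(ratio: float) -> float:
    """Pitch change in octaves; a negative value means the sound gets lower."""
    return math.log2(ratio)


def stretched_duration(seconds: float, ratio: float) -> float:
    """Playing slower makes the same recording last longer."""
    return seconds / ratio


ratio = speed_ratio(78.0, 30.0)
print(round(ratio, 3))                             # 0.385
print(round(pitch_shift_octaves(ratio), 2))        # -1.38
print(round(stretched_duration(10.0, ratio), 1))   # 26.0 (hypothetical 10 s clip)
```

Under these assumptions the slowed record drops by well over an octave and lasts about 2.6 times as long, which fits the description of a sound reduced to a tone of indistinct noise.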

http://classes.berklee.edu/mbierylo/mtec111_Pages/lesson7.html

http://filmsound.org/articles/ninecomponents/9components.htm

http://www.passmyexams.co.uk/GCSE/biology/food-and-digestion.html#4

http://www.passmyexams.co.uk/GCSE/physics/

http://www.sparknotes.com/psychology/psych101/sensation/section3.rhtml

What is Sound?

Sound

Sound is what we experience when the ear reacts to a certain range of vibrations. These vibrations themselves can also be called sound. Acoustic instruments generally produce sound when some part of the instrument is either struck, plucked, bowed or blown into. Electronic instruments produce sound indirectly: they produce variations in electrical current which are amplified and sent through a speaker.

The three qualities of sound are: pitch, timbre (tone color) and loudness.

Pitch

Pitch is the quality of sound which makes some sounds seem "higher" or "lower" than others. Pitch is determined by the number of vibrations produced during a given time period. The vibration rate of a sound is called its frequency; the higher the frequency, the higher the pitch. Frequency is often measured in units called Hertz (Hz). If a sound source vibrates at 100 vibrations per second, it has a frequency of 100 Hertz (100 Hz). Mels scale would be found here.

The average person can hear sound from about 20 Hz to about 20,000 Hz. The upper frequency limit will drop with age.

The human ear is very adept at filling gaps. There is a body of evidence to show that if the lowest frequency partial is missing from a complex tone, the ear will attempt to fill it in. This effect is used, for instance, by church organists who simulate the effect of, say, a low ‘C’ for which they do not have a pipe of sufficient size by playing the ‘C’ and ‘G’ an octave above. These two pipes provide all the partials for the lower ‘C’ note except for the lowest frequency, which the ear obligingly provides.

Listening to speech or music on a poor quality transistor radio that cannot reproduce low frequencies provides another example. The programme is rendered intelligible by the ear filling in the lowest partial tones. That rapid speech is intelligible suggests that the synthesis in the ear is practically instantaneous.

Timbre

Timbre is a French word that means "tone color". It is pronounced: tam' ber. Timbre is the quality of sound which allows us to distinguish between different sound sources producing sound at the same pitch and loudness. The vibration of sound waves is quite complex; most sounds vibrate at several frequencies simultaneously. The additional frequencies are called overtones or harmonics. The relative strength of these overtones helps determine a sound's timbre.

Dull---Brilliant, Cold----Warm, Pure----Rich

Loudness

Loudness is the amount or level of sound (the amplitude of the sound wave) that we hear. Changes in loudness in music are called dynamics. Sound level is often measured in decibels (dB). Sound pressure level (SPL) is a decibel scale which uses the threshold of hearing as a zero reference point. Sones scale would be found here.

Dynamic Range

The dynamic range of an orchestra can exceed 100 dB. If the softest passage is 0 dB, the loudest passages can exceed 100 dB. In electronic equipment the lower limit is the noise level (hissing sound) and the upper limit is reached when distortion occurs. The dynamic range of electronic equipment is also called signal-to-noise ratio.

http://sdsu-physics.org/physics201/p201chapter7.html

http://homepages.ius.edu/kforinas/S/Pitch.html


Sounds may be generally characterized by pitch, loudness, and quality. The perceived pitch of a sound is just the ear's response to frequency, i.e., for most practical purposes the pitch is just the frequency. The pitch perception of the human ear is understood to operate basically by the place theory, with some sharpening mechanism necessary to explain the remarkably high resolution of human pitch perception.

|

What is the difference between loudness and pitch of sound?

Loudness is a function of the amplitude of the sound wave's pressure. Our eardrums are moved by the sound pressure. The greater the amplitude, the greater the volume.

Pitch is related to its frequency. The higher the frequency, the higher the pitch.

Loudness = amplitude of the sound pressure

Softest sound

Loudest sound

Pitch = frequency

Lowest pitch

Highest pitch


Pitch, Loudness and Quality of Musical Notes

- Pitch

- Loudness

- Quality (or tone)

Pitch

- Pitch is a term used to describe how high or low a note being played by a musical instrument or sung seems to be.

- The pitch of a note depends on the frequency of the source of the sound.

- Frequency is measured in Hertz (Hz), with one vibration per second being equal to one hertz (1 Hz).

- A high frequency produces a high pitched note and a low frequency produces a low pitched note.

Loudness

- Loudness depends on the amplitude of the sound wave.

- The larger the amplitude, the more energy the sound wave contains, and therefore the louder the sound.

Quality

- This is used to describe the quality of the waveform as it appears to the listener. Therefore the quality of a note depends upon the waveform.

- Two notes of the same pitch and loudness, played from different instruments do not sound the same because the waveforms are different and therefore differ in quality or tone.
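The point about quality can be illustrated numerically. The sketch below builds two notes on the same fundamental, one as a pure sine and one with extra harmonics added, and shows that the waveforms differ while repeating with the same period. The harmonic weights, sample rate, and function name are assumptions made for illustration, and the two notes are not matched for loudness.

```python
import math


def tone(fundamental_hz, harmonic_weights, sample_rate=8000, n_samples=80):
    """Sum of sine harmonics: weight k applies to (k + 1) times the fundamental."""
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        value = sum(
            weight * math.sin(2 * math.pi * fundamental_hz * (k + 1) * t)
            for k, weight in enumerate(harmonic_weights)
        )
        samples.append(value)
    return samples


# Same pitch (100 Hz fundamental), different waveform.
pure = tone(100.0, [1.0])
rich = tone(100.0, [1.0, 0.5, 0.25])

# The largest sample-by-sample difference shows the shapes are not the same.
print(max(abs(a - b) for a, b in zip(pure, rich)))
```

With an 8000 Hz sample rate, 80 samples span exactly one period of a 100 Hz note, so both lists describe one full cycle of the same pitch with a different shape.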

#####################################

1. that loud and quiet sounds are produced by big or small vibrations.

2. that high or low pitched notes are produced by fast or slow vibrations.

PS1061: Sensation and Perception 2013

amplitude = distance between peak and trough of sinewave (overall size pressure changes)

frequency = number of pressure changes per unit of time (inverse of sinewave period, measured in Hz = cycles per second)

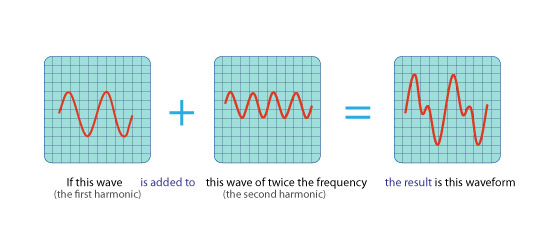

musical tones are combinations of pure tones (cf. Barlow & Mollon 1982): fundamental (determines pitch) + harmonic frequencies (determine timbre)

more complex sounds can be generated by adding further frequencies: chords, consonance, dissonance, vowels, symphonies, etc.

see also: combination tones

white noise is the superposition of many tones with random amplitude and frequency (interfering ripples on the pond) - think of the surface structure of the sea
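A rough numerical sketch of this superposition idea: many sine tones with random amplitude and frequency are added sample by sample. The random phase, the 20 Hz lower limit, the upper limit of half the sample rate, and the normalisation are all assumptions added here, and a finite sum of tones only approximates true white noise.

```python
import math
import random


def random_tone_mix(n_tones, sample_rate, n_samples, seed=0):
    """Superimpose n_tones sine waves with random amplitude, frequency and phase."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    tones = [
        (
            rng.uniform(0.0, 1.0),                # amplitude
            rng.uniform(20.0, sample_rate / 2),   # frequency in Hz
            rng.uniform(0.0, 2 * math.pi),        # phase in radians
        )
        for _ in range(n_tones)
    ]
    # Dividing by the summed amplitudes keeps every sample within [-1, 1].
    norm = sum(amplitude for amplitude, _, _ in tones) or 1.0
    samples = []
    for i in range(n_samples):
        t = i / sample_rate
        total = sum(a * math.sin(2 * math.pi * f * t + p) for a, f, p in tones)
        samples.append(total / norm)
    return samples


noise = random_tone_mix(n_tones=200, sample_rate=8000, n_samples=400)
print(min(noise), max(noise))
```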

encoding of acoustic signals requires several 'engineering tasks', which are accomplished by astonishing biological solutions:

a given frequency stimulates a particular group of haircells at a given location of the basilar membrane

tone frequency is converted to location !!

fundamental mechanisms:

systematic variation of frequency of masking tone to determine a set of detection thresholds for a given target frequency (cf. Barlow & Mollon 1982) is a key method to determine the auditory 'tuning curve' for this target frequency: threshold amplitude as function of mask frequency

the 'bandwidth' of the frequency filter that is detecting the target tone is given by the frequency difference at half-height of the threshold function

this filter tuning is the basis for the perception of pitch !

many filters cover full range of frequencies – like digital audio systems!

perceived loudness can be measured quantitatively by comparing two successively presented tones (of different frequency)

and deciding which one sounded louder (forced choice FC to find threshold for louder/softer)

by comparing tones of many frequencies we can derive curves of equal loudness

speech only covers a small region of the auditory response range: 300 - 5000 Hz, 40 - 70 dB

remember: sound pressure level (SPL) is measured in decibels:

20 * log10 (p1/p0)

as multiples of the hearing threshold pressure p0 at 1000 Hz, in logarithmic units:

an increase in sound pressure by a factor of 10 means an additional 20 dB

(for example: 20 dB = 10 times the sound pressure, 40 dB = 100 times, 60 dB = 1000 times, etc.)


… imagine how difficult it is to recognize the same word from different speakers, or the voice of a particular speaker ...

telephone systems cut off the upper part of the spectrum with minimal effects on speech recognition !!!

... imagine the consequences of such impairments of the audibility function for communication and social life ...

(in biology there are experts for this job, like barn owls: see Konishi 1986)

or to pick up the tune of a single instrument from a large orchestra (this is called the 'cocktail party effect')

the core of the problem of the cocktail party effect (Cherry 1953) is masking :

the detection of a tone is impaired if another tone or noise is presented at the same time


last update 6-02-2013

Johannes M. Zanker

The higher the frequency, the higher the pitch. The lower the frequency, the lower the pitch.


PS1061: Sensation and Perception 2013

Term 2, Wednesday 9-11 am (WinAud)

Lecture 5: Auditory perception: Hearing noise and sound

Course co-ordinator: Johannes M. Zanker, j.zanker@rhul.ac.uk, (Room W 2464)

Lecture Topics

- auditory perception opens another window to the world : another 'sensory channel'

- the physical nature of sound: tones, mixtures, noise, complex patterns

- basics of the sensory system: peripheral filters and tonotopic cortical representation

- detection and discrimination of pure tones: characterised by frequency and intensity

- the complexity of spoken language, the use of telephones, the effects of hearing loss

- sound localisation (& the cocktail party effect) in auditory scenes

- can we generate auditory illusions ???

questions about hearing (auditory perception)

what is the significance of auditory perception for a person in real life?

- sounds signal events: therefore hearing provides an alarm system

- emotional balance is influenced by sound (distress by noise, screeching, relaxation by listening to music)

- the auditory system is essential for communication - crucial for human society

- the perceptual basis of harmony (why do some things sound pleasant?)

- the influence of experience and knowledge on auditory perception

- mechanisms of separating signal sources (localising the origin of sound)

- how does the brain allow for recognition of spoken words and voices

the nature of sound

| a sound source is emitting circular pressure waves (shells of air compression) | sound waves are similar to the radiating ripples on the water surface, when a pebble is tossed into a still pond |

| |

| a pure tone is represented by a sinewave (air pressure as function of space/time) which is travelling through space, with amplitude and frequency (1/period) corresponding to perceived loudness and pitch | |

amplitude = distance between peak and trough of sinewave (overall size pressure changes)

frequency = number of pressure changes per unit of time (inverse of sinewave period, measured in Hz = cycles per second)
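These two definitions can be checked on a sampled sine wave. The sketch below generates a pure tone, measures amplitude as the peak-to-trough distance used above, and estimates frequency from the spacing of upward zero crossings. The 8000 Hz sample rate, the 100 Hz test tone, and the function names are assumptions for illustration only.

```python
import math


def sine_samples(freq_hz, peak, sample_rate, duration_s):
    """Sample a pure tone; `peak` is the height of the crest above zero."""
    n = int(sample_rate * duration_s)
    return [peak * math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]


def peak_to_trough(samples):
    """Amplitude in the sense used above: distance between peak and trough."""
    return max(samples) - min(samples)


def estimate_frequency(samples, sample_rate):
    """Cycles per second, from the spacing of upward zero crossings."""
    ups = [i for i in range(1, len(samples)) if samples[i - 1] < 0 <= samples[i]]
    if len(ups) < 2:
        raise ValueError("need at least two full cycles")
    cycles = len(ups) - 1
    return cycles * sample_rate / (ups[-1] - ups[0])


wave = sine_samples(freq_hz=100.0, peak=0.5, sample_rate=8000, duration_s=1.0)
print(peak_to_trough(wave))
print(estimate_frequency(wave, 8000))
```

The frequency estimate is the inverse of the average period between crossings, which mirrors the "1/period" wording above.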

making music: the scale

|

|

C D E F G A B C |

|

| harmonic intervals are determined by characteristic frequency ratios |

combining frequencies : pitch

frequency & amplitude of pure tones are the basis of perceived pitch and loudness |  |

what happens to the waveform when you superimpose pure tones ? |  |

more complex sounds can be generated by adding further frequencies: chords, consonance, dissonance, vowels, symphonies, etc.

see also: combination tones

the nature of noise

what happens when you superimpose random tones (i.e. produce several waves at the same time) ? |  |

acoustic sensory organ : the ear

the ear works as a transducer, converting sound waves into neural signals |

|

- outer ear: directional microphone

- middle ear: impedance matching, overload protection

- inner ear: neural encoding, frequency analysis

the sensory surface: inner ear

|  |

mechanical stimulation is transmitted through the ossicles onto the oval window - here the oscillations are converted to pressure waves in the cochlea - this generates a travelling wave on the basilar membrane (which resonates like the string of a guitar) |

|

from peripheral filtering to tonotopic maps

| an animation of middle and inner ear motion, <<source unknown>> |  | to see (and hear) the inner ear in action, go to http://www.hhmi.org/biointeractive/neuroscience/cochlea.html |

encoding with travelling waves :

(from top to bottom: 100 Hz, 1,000Hz,10,000Hz) |  |

a given frequency stimulates a particular group of haircells at a given location of the basilar membrane

tone frequency is converted to location !!

|

after G. v. Békésy, 1967 |

fundamental mechanisms:

- tuning : preferential response of a sensor to a dedicated stimulus range (e.g. frequencies)

- filter : tuning to frequency (pitch) in the peripheral auditory system

- sensory map : cortical representation of pitch as function of location (tonotopy)

frequency channels revealed by masking

masking: you can only hear the piccolo if the bassoon is played very softly

based on this observation, we can design a psychophysical masking experiment :

close mask |  distant mask |

|

|

the 'bandwidth' of the frequency filter that is detecting the target tone is given by the frequency difference at half-height of the threshold function

|  |

frequency tuning: basis of pitch perception

frequency tuning can be measured in systematic masking experiments for different target tones …

many filters cover the full range of frequencies – like digital audio systems!

| tuning curves resulting from frequency filter mechanisms can be measured in systematic masking experiments : |

| electrophysiological measurements from the auditory pathway (e.g. cochlear nerve of cat, sensitivity as function of frequency) generate very similar patterns of frequency tuning for individual neurones: preferred tones |

many filters cover the whole audible frequency range |  |

this filter tuning is the basis for pitch discrimination involved in auditory perception, such as recognising musical tunes!

the same principle of encoding frequency components separately is used in digital audio systems !!


how to measure loudness ...

the pressure of airwaves determines the magnitude of auditory sensation: 'loudness' | when sound intensity = sound pressure level (SPL) is decreased or increased relative to a reference tone, subjective ratings of loudness change proportionally |

and deciding which one sounded louder (forced choice FC to find threshold for louder/softer)

intensity of comparison tone is adjusted until it has the same ‘subjective’ loudness as the reference tone

and then the physical intensity (sound pressure level SPL) is recorded as ‘perceived loudness’

sound intensity: perception of loudness

Audiogram (or audibility function): describes the hearing performance of an individual (Berrien 1946)

by comparing tones of many frequencies we can derive curves of equal loudness

|

|

remember: sound pressure level (SPL) is measured in decibels:

20 * log10 (p1/p0)

as multiples of the hearing threshold pressure p0 at 1000 Hz, in logarithmic units:

an increase in sound pressure by a factor of 10 means an additional 20 dB

(for example: 20 dB = 10 times the sound pressure, 40 dB = 100 times, 60 dB = 1000 times, etc.)
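The decibel rule above can be worked through in a few lines. This sketch assumes the ratio in the formula is a ratio of sound pressures, which is the case where the factor 20 applies (a ratio of powers would use a factor of 10 instead). The reference value of 20 micropascals is the commonly quoted threshold pressure near 1000 Hz; it is not given in these notes, so treat it and the function names as assumptions.

```python
import math

# Assumed reference pressure (pascals): commonly quoted hearing threshold near 1000 Hz.
P0_PASCAL = 20e-6


def spl_db(pressure_pa, reference_pa=P0_PASCAL):
    """Sound pressure level in dB relative to the reference pressure."""
    return 20 * math.log10(pressure_pa / reference_pa)


def pressure_ratio(db):
    """Inverse relation: how many times the reference pressure a given dB value is."""
    return 10 ** (db / 20)


for factor in (1, 10, 100, 1000):
    print(factor, round(spl_db(factor * P0_PASCAL), 6))
```

Each tenfold increase in pressure adds 20 dB, so the loop steps through 0, 20, 40 and 60 dB.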

the ecology of sound intensity

| threshold of hearing (at 1000 Hz) | |||

| normal breathing | |||

| leaves rustling in a breeze | |||

| empty lecture theatre | |||

| Holloway campus at night (without planes) | |||

| quiet restaurant | |||

| two-person conversation | |||

| Trafalgar Square | |||

| vacuum cleaner | |||

| huge waterfall (Niagara) | danger level | ||

| Underground train | |||

| Propeller plane at takeoff | hearing loss | ||

| Heathrow: jet at takeoff (low flying Concorde) | pain level |


spectrogram

auditory events can be complicated patterns of frequency and intensity (this is called a ‘spectrum’) which are modulated as function of time

to display and analyse such events, scientists use ‘spectrograms’, or ‘sonograms’: frequency composition is shown graphically as it changes in time

the complexity of a spoken word

each spoken word generates a complex pattern of frequency and intensity (spectrum), which is modulated as function of time | example; the phrase 'enjoy your weekend' is recorded as

|

the spectrum of human speech

speech sounds cover a wide range of the audible spectrum

|  |

telephone systems cut off the upper part of the spectrum with minimal effects on speech recognition !!!

hearing loss

there are many different kinds of auditory impairments, apart from complete deafness |

|

... imagine the consequences of such impairments of the audibility function for communication and social life ...

auditory space

how does (perceptual) auditory space represent events in a four-dimensional world (3 spatial dimensions + time)?

the ear is a 1D sensor (a microphone samples at one point in space) - so :

in vision, we have 2D-images, can easily localise objects in the visual field and see several objects at the same time

but can you hear things at the same time in different locations?

- can we hear in two, or three dimensions?

- can the auditory system localise objects?

- can we hear several objects at the same time?

sound localization

there is no direct representation of auditory space : location needs to be calculated from a number of cues (see Goldstein 2002, chapter 11)

(in biology there are experts for this job, like barn owls: see Konishi 1986)

- pinnae : crucial for sensation of space (reduced when using earphones!); used to locate elevation (up-down dimension)

- inter-aural processing (combining information from both ears) to find azimuth (left-right dimension) of sound source

- intensity differences (IID) : acoustic ‘shadow’ of the head

- temporal or phase differences (ITD): humans can detect inter-aural delays of 10 - 650 microsec (1 microsec = 1/1,000,000 sec)
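To get a feel for the size of these inter-aural delays, here is a minimal sketch using a deliberately simple straight-path model: the extra distance to the far ear is taken as the ear separation times the sine of the azimuth. The ear separation of 0.22 m, the speed of sound of 343 m/s, and the function name are assumptions made for illustration, and the model ignores how sound bends around the head.

```python
import math

SPEED_OF_SOUND_M_S = 343.0   # assumed: air at roughly room temperature
EAR_SEPARATION_M = 0.22      # assumed, hypothetical head width


def itd_microseconds(azimuth_deg,
                     ear_separation_m=EAR_SEPARATION_M,
                     speed_m_s=SPEED_OF_SOUND_M_S):
    """Inter-aural time difference for a distant source at the given azimuth.

    0 degrees is straight ahead, 90 degrees is directly to one side.
    """
    path_difference_m = ear_separation_m * math.sin(math.radians(azimuth_deg))
    return 1e6 * path_difference_m / speed_m_s


for azimuth in (0, 10, 45, 90):
    print(azimuth, round(itd_microseconds(azimuth)))
```

With these assumed values the delay grows from zero straight ahead to a little over 600 microseconds for a source directly to one side, the same order of magnitude as the upper figure quoted above.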

note the similarity between auditory stereo (this name sounds familiar - your stereo system has two speakers!) and stereovision ....

the cocktail party effect

it is easy (for young folk) to single out one particular voice from the background of a noisy pub, or to pick up the tune of a single instrument from a large orchestra (this is called the 'cocktail party effect')

how can this be achieved? |  |

the core of the problem of the cocktail party effect (Cherry 1953) is masking :

the detection of a tone is impaired if another tone or noise is presented at the same time

- masking depends on proximity in space and similarity in frequency

- binaural unmasking can be used to separate sound sources in space: if spatial distance or difference in frequency increases, separation becomes easier - these two cues are combined in the binaural information (subtract signals from the two ears to unmask separate sound sources)

- high-level effects (attention, familiarity of voice, language) & sensory fusion (we use vision to support hearing) can also be used to separate sound sources in space

auditory illusions

we can create illusions in the auditory system like in the visual system !


| Shepard’s (1964) eternally rising tone: |  |

summary: auditory perception

- the auditory ‘channel’ is an important source of sensory information

- the ear is a highly sensitive and intelligent device to pick up and convert sound pressure waves

- frequency filtering is the basis of perceiving pitch

- sound intensity is the second characteristic property of sound (with high ecological significance!)

- spoken words generate complex patterns of frequency and intensity (spectrogram)

- speech covers a wide range of the audible spectrum, which can be affected by hearing loss

- sound can be localized by calculating intensity and phase differences between the two ears

- auditory localization works in difficult environments (cocktail party effect)

General Reading:

- Zanker, J. M. (2010) Sensation, Perception, Action – an evolutionary perspective. Palgrave, chapters 7 and 8

- Goldstein, E.B. (2007) Sensation and Perception (7th ed.) Wadsworth-Thompson (152.1 GOL) chapters 11-13 for various aspects of hearing

Specific References:

- Békésy, G. v., 1967, 'Sensory Inhibition', Princeton University Press, Princeton NJ

- Berrien KF, 1946, ‘The effects of noise’ Psychol. Bull. 43, 141-161

- Cherry EC, 1953, ‘Some experiments on the recognition of speech, with one and two ears’ Journal of the Acoustical Society of America, 25:975-979

- Evans EF, 1982, 'Functions of the auditory system' in: Barlow, H & Mollon, J., eds. ‘The Senses’, Cambridge University Press, 1982 (612.8 BAR) chapter 15, p 307-331

- Gulick WL, Gescheider GA, Frisina RD, 1989 ‘Hearing’ Oxford University Press, New York

- Kolb, B & Whishaw, IQ (2001) An Introduction to Brain and Behaviour. New York: Worth Publ. (612.82 KOL)

- Konishi M, 1986 "Centrally synthesized maps of sensory space" Trends in Neuroscience 4/86 163-168

- Pierce, J.R., 1983, 'The Science of Musical Sound', Freeman, New York ( VWBB Pie)

- Shepard, R. N. (1964). "Circularity in judgements of relative pitch," J. Acoust. Soc. Am.; p 2346-2353


The higher the frequency, the higher the pitch. The lower the frequency, the lower the pitch.

Frequency is a physical characteristic of a wave, and pitch is what we hear as a result, depending on whether the frequency is high or low.

###############################################################

Pitch, loudness and timbre

Frequency and pitch, amplitude, intensity and loudness, envelope, spectrum and timbre: how are they related? This support page to the multimedia chapter Sound is the first in a series giving more details on these sometimes subtle relationships.

- Producing, analysing and displaying sound

- Frequency and pitch

- Amplitude, intensity and loudness

- Envelope, spectrum and timbre

- Relations between physical and perceptual quantities

|

Many of our sound samples have been generated by computer, as shown below. An explicit equation for the desired sound wave (amplitude as a function of time) is evaluated and converted by the computer’s sound card to voltage as a function of time, V(t). This is input to an oscilloscope, which converts V(t) to a y(x) display.

|

| When preparing these examples, these are also output to a power amplifier and loudspeaker, as shown here. When you use them, you will download the numerical signal and this time your computer’s sound card will convert it to V(t), amplify it and output it to headphones or speakers. This and subsequent sections will probably work rather better with headphones than speakers, especially tiny computer speakers. |

Frequency and pitch |

| In this example, the signal is a sine wave. Its frequency is initially 440 Hz. It then increases over time to 880 Hz and maintains that frequency. The amplitude remains constant throughout. If you are using computer speakers, you should turn the volume down considerably to avoid distortion (and possible damage to the speakers). Now, what did you hear? You probably heard the pitch increase considerably. You probably also noticed that the sound became louder: apart from any effects due to peculiarities in your amplifier and headphones/speakers, this is expected because human ears are rather more sensitive at 880 Hz than at 440 Hz. You may also have heard rattles and distortion due to your speakers, especially if they are the small type usually used in computers. By international agreement*, 440 Hz is the standard pitch for the musical note A4, or A above middle C. (This note is used to tune orchestras, chiefly because it is an open string on violins and violas, and is an octave and three octaves respectively above open strings on the cellos and basses.) 880 Hz is the note A5, one octave above A4. In much Western music, the scales from which tunes are made have the property that the eighth note in the scale has double the frequency of the first. 'Oct' is the prefix for eight, hence octave. So increasing frequency increases pitch, and a doubling of the frequency is an increase of one octave. For notes that are not too high (including these), increasing the frequency also increases the loudness, as mentioned above, and as discussed in more detail in another page. You’ll possibly also find that the timbre changes. So frequency has a big effect on pitch, but also affects loudness and timbre. * This agreement is not universal. In the past, the tuning varied both with time and geographical location. In orchestras, there has been a tendency for the frequency of A to rise.
Most of us agree that changing the frequency by a given proportion gives the same pitch change, no matter what the start frequency is. For more about pitch, frequency and wavelengths, go to Frequency and pitch of sound. |
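The statement that equal frequency proportions give equal pitch changes can be expressed with a logarithm. The sketch below measures an interval in octaves and in semitones; dividing the octave into 12 equal semitones is an assumption (equal temperament) that this page does not spell out, and the function names are hypothetical.

```python
import math

A4_HZ = 440.0  # standard pitch for A4, as stated above


def interval_octaves(f_start_hz, f_end_hz):
    """Pitch change in octaves: one octave per doubling of frequency."""
    return math.log2(f_end_hz / f_start_hz)


def interval_semitones(f_start_hz, f_end_hz):
    """Pitch change in equal-tempered semitones (assumed: 12 per octave)."""
    return 12 * interval_octaves(f_start_hz, f_end_hz)


def shift(frequency_hz, semitones):
    """Frequency reached after moving up or down by a number of semitones."""
    return frequency_hz * 2 ** (semitones / 12)


print(interval_octaves(A4_HZ, 880.0))      # A4 to A5
print(interval_semitones(110.0, 220.0))    # same proportion, lower start
print(round(shift(A4_HZ, 12), 6))          # twelve semitones up from A4
```

Going from 440 Hz to 880 Hz and from 110 Hz to 220 Hz gives the same interval, because only the ratio of the two frequencies enters the calculation.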

Envelope, spectrum and timbre | |||||||||

| All the other perceptual properties of a sound are collected together in timbre, which is defined negatively: if two different sounds have the same pitch and loudness, then by definition they have different timbres. Here are six musical notes. The pitch is almost constant (‘almost’ because it’s hard to pick up a new, cold instrument and play it perfectly in tune) and the loudness is approximately constant, too. (What does it mean to say that a bell note, which dies away gradually, is as loud as a sustained violin note?) So these sounds all have different timbres, which is how we can tell that they come from different instruments. The top graph is the microphone signal (proportional to the sound pressure) as a function of time. Notice the substantial differences here. The orchestral bell and the guitar share the property that they reach their maximum amplitude almost immediately (when struck and plucked, respectively). No more energy is put into them, and energy is continuously lost as sound is radiated (and much more is lost due to internal losses), so the amplitude decreases with time. Of the others, the bassoon has the next fastest attack: it reaches its maximum amplitude rather quickly. We should add that, for the wind instruments and violin, the rapidity of the start depends on the details of how one plays a note. Nevertheless, the faster start of the bassoon is typical. This series shows us that the envelope is very important in determining the timbre. Another contribution to timbre comes from the spectrum, which is the distribution of amplitude (or power or intensity) as a function of frequency. This is shown in the lower graph. With the exception of the bell, the spectra all show a series of equally spaced narrow peaks, which we call harmonics, about which more later. Note that the spectra are different, particularly at high frequencies. The spectrum contributes to the timbre, but usually to a lesser extent than the envelope. | |||||||||

############################################

Sound Waves

What do they look like? How can you describe a sound wave?

You can see a picture of a sound wave on the screen of a device called an oscilloscope. Look at the diagram above. The compressions, in which particles are crowded together, appear as upward curves in the line. The rarefactions, in which particles are spread apart, appear as downward curves in the line.

Three characteristics are used to describe a sound wave. These are wavelength, frequency, and amplitude.

####################################

Questions on sound: Ch 26 Chapter Review Q & A's

Q: What is the source of all sounds?
A: Vibrating objects.

Q: How does pitch relate to frequency?
A: Pitch is subjective, but it increases as frequency increases.

Q: What is the average frequency range of a young person's hearing?
A: 20 Hz to 20,000 Hz.

Q: Distinguish between infrasonic and ultrasonic sound.
A: Infrasonic is below 20 Hz and ultrasonic is above 20,000 Hz.

Q: Distinguish between compressions and rarefactions of a sound wave.
A: Compressions are regions of high pressure and rarefactions are regions of low pressure.

Q: How are compressions and rarefactions produced?
A: Compressions and rarefactions are produced by a vibrating source.

Q: What evidence can you cite to support the statement: light can travel in a vacuum?
A: You can see the sun or the moon.

Q: Can sound travel through a vacuum?
A: No, sound needs a medium to travel.

Q: How does air temperature affect the speed of sound?
A: Sound travels faster at higher temperatures.

Q: How does the speed of sound in air compare with its speed in water and in steel?
A: Sound travels faster in water and faster again in steel.

Q: Why does sound travel faster in solids and liquids than in gases?
A: The atoms are closer together, and solids and liquids are more elastic media.

Q: Why is sound louder when a vibrating source is held to a sounding board?
A: More surface is forced to vibrate and push more air.

Q: Why do different objects make different sounds when dropped on a floor?
A: They have different natural frequencies.

Q: What does it mean to say that everything has a natural frequency of vibration?
A: The natural frequency is characteristic of the object's shape, size, and composition.

Q: What is the relationship between forced vibration and resonance?
A: Resonance is forced vibration at the natural frequency.

Q: Why can a tuning fork or bell be set into resonance, while tissue paper cannot?
A: Tissue paper has no natural frequency.

Q: How is resonance produced in a vibrating object?
A: By input of vibrations at a frequency that matches the natural frequency of the object.

Q: What does tuning in a radio station have to do with resonance?
A: Tuning adjusts the natural frequency of the radio's circuit to match the frequency of the station's signal, so the circuit resonates with that station.

Q: Is it possible for one sound wave to cancel another? Explain.
A: Yes, by destructive interference.

Q: Why does destructive interference occur when the path lengths from two identical sources differ by half a wavelength?
A: The crests of one coincide with the troughs of the other, canceling each other out.

Q: How does interference of sound relate to beats?
A: Beats are a result of periodic interference.

Q: What is the beat frequency when a 494 Hz tuning fork and a 496 Hz tuning fork are sounded together? And what is the frequency of the tone heard?
A: The beat frequency is (496 - 494) Hz = 2 Hz. The tone heard is halfway between the two, at 495 Hz.

Q: When watching a baseball game, we often hear the bat hitting the ball after we actually see the hit. Why?
A: Light travels faster than sound.

Q: What 2 physics mistakes occur in a movie when you see and hear a distant explosion in outer space at the same time?
A: The sound from a distant explosion should reach you after you see the light. Also, sound cannot travel in a vacuum, so no sound would be transmitted in outer space.

Q: Why will marchers at the end of a long parade following a band be out of step with marchers nearer the band?
A: There is a time delay for sound from a marching band near the front of a long parade to reach the marchers at the end.

Q: When a sound wave propagates past a point in the air, what are the changes that occur in the pressure of air at this point?
A: Air pressure increases and decreases at a rate equal to the frequency of the sound wave.

Q: If the handle of a tuning fork is held solidly against a table, the sound becomes louder. Why?
A: More surface vibrates and more air is pushed.

Q: If the handle of a tuning fork is held solidly against a table, the sound becomes louder. How will this affect the length of time the fork keeps vibrating?
A: The time decreases because more sound energy is sent out, so the tuning fork loses energy more rapidly.

Q: What beat frequency would occur if two tuning forks (260 Hz and 266 Hz) are sounded together?
A: (266 - 260) Hz = 6 Hz. (The tone heard is halfway between the two, at 263 Hz.)

Q: Two notes are sounding, one of which is 440 Hz. If a beat frequency of 5 Hz is heard, what is the other note's frequency?
A: Either 445 Hz or 435 Hz.

Q: Two sounds, one at 240 Hz and the other at 243 Hz, occur at the same time. What beat frequency do you hear?
A: (243 - 240) Hz = 3 Hz.

Q: A longitudinal wave can also be called a ___ wave.
A: A compression wave.

Q: A wave that resembles a transverse wave but occurs in mediums that are fixed at both ends is called a ___ wave.
A: A standing wave.

Q: When a wave is incident on a medium boundary and changes its direction due to a change in the wave's velocity, this wave behavior is called ___.
A: Refraction.

Q: When a wave encounters an obstacle in its path and spreads out (bends) around the obstacle, this wave behavior is called ___.
A: Diffraction.

Q: The ___ of a sound wave depends on how much the matter carrying the wave is compressed by each vibration.
A: Amplitude.

Q: True or false: the frequency of a sound wave is the same as the frequency of the vibration that produces the sound.
A: True.

Q: Ultrasounds are sounds found above the ___ Hz mark.
A: Ultrasounds are above 20,000 Hz.

Q: The pitch of a train whistle appears to ___ as it approaches and ___ as it travels away from an observer.
A: The pitch appears to increase as it approaches and decrease as it moves away.

Q: The field of study that analyzes the behavior of waves is called ___.
A: Acoustics.

Q: The multiple reflections of a sound wave are called ___.
A: Reverberation.

Q: True or false: the greater the amount of energy carried by a wave, the larger its amplitude.
A: True.

Q: The intensity of sound is measured in ___.
A: Decibels.

Q: What 3 things can you do to a guitar string to decrease its frequency and pitch?
A: Make the string 1. longer, 2. thicker, 3. looser (decrease the tension of the string).

Q: When a wave changes from a straight wavefront to a curved front, this is called ___.
A: Diffraction.

Q: This term refers to waves of the same frequency that are either in phase or out of phase with each other.
A: Interference.

http://w3.shorecrest.org/~Lisa_Peck/Physics/syllabus/soundlight/ch26sound/ch26rev_QAs.pdf

#######################

Frequency is a physical characteristic, as is amplitude. They are absolute parameters, independent of whether a human (or animal, say) is there to experience them. Volume and pitch are perceived characteristics, which exist only as human descriptions of their experience. As frequency increases, pitch APPEARS to increase; as amplitude increases, volume APPEARS to increase. You might say that pitch is the brain (and mind)'s built-in mechanism for "measuring" frequency, and that volume is the brain (and mind)'s mechanism for "measuring" amplitude, roughly speaking.

Higher frequency = higher pitch. (Think of a train whistle: coming towards you, the waves are compressed and sound higher; moving away from you, they are stretched and sound lower.) Higher amplitude is heard as higher volume, because the wave carries more power.
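The train whistle effect can be put into numbers with the standard Doppler formula for a moving source and a stationary listener. This is a rough sketch: the 500 Hz whistle, the 30 m/s train speed, the 343 m/s speed of sound (air at about 20 °C) and the function name are assumptions for illustration.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C (assumed value)

def observed_frequency(source_hz, source_speed, approaching,
                       sound_speed=SPEED_OF_SOUND):
    """Frequency heard by a stationary listener from a moving source.

    Approaching: waves are compressed, so the frequency rises.
    Receding: waves are stretched, so the frequency falls.
    """
    if source_speed >= sound_speed:
        raise ValueError("formula only applies below the speed of sound")
    relative = -source_speed if approaching else source_speed
    return source_hz * sound_speed / (sound_speed + relative)

# Hypothetical train: 500 Hz whistle, travelling at 30 m/s.
heard_coming = observed_frequency(500.0, 30.0, approaching=True)
heard_going = observed_frequency(500.0, 30.0, approaching=False)
print(f"approaching: {heard_coming:.1f} Hz, receding: {heard_going:.1f} Hz")
```

The whistle itself never changes frequency; only the frequency arriving at the listener changes, and with it the perceived pitch.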

Higher Frequency = Higher Pitch

Higher Amplitude = Higher Volume

Source(s): KS3 Science Lessons
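Because pitch follows frequency, the beat questions in the chapter review above come down to simple arithmetic: the beat frequency is the difference between the two frequencies, and the tone heard lies halfway between them. A minimal sketch, with function names made up for illustration:

```python
def beat_frequency(f1_hz, f2_hz):
    """Beats per second heard when two tones sound together."""
    return abs(f1_hz - f2_hz)

def tone_heard(f1_hz, f2_hz):
    """Frequency of the tone heard: the average of the two frequencies."""
    return (f1_hz + f2_hz) / 2

def possible_partners(known_hz, beat_hz):
    """The two frequencies that would give this beat rate against a known tone."""
    return (known_hz - beat_hz, known_hz + beat_hz)

for pair in [(494, 496), (260, 266), (240, 243)]:
    print(pair, "beats:", beat_frequency(*pair), "Hz,",
          "tone heard:", tone_heard(*pair), "Hz")

print(possible_partners(440, 5))
```

For the 260 Hz and 266 Hz forks this gives 6 beats per second around a 263 Hz tone, and for a 440 Hz note with a 5 Hz beat it gives 435 Hz or 445 Hz as the other note.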

##################################################

The amplitude of a sound wave determines its loudness. Since sound is a compression wave, its amplitude corresponds to how much the medium is compressed by each vibration.

A sound wave will spread out after it leaves its source, decreasing its amplitude and loudness. Pitch depends on the frequency: short wavelengths have higher frequencies. The relationship between velocity, wavelength and frequency is v = wf, or velocity = wavelength × frequency. If there is some absorption in the material, the loudness of the sound will decrease as it moves through the substance. If it hits a hard surface it will bounce (echo).
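As a quick check of v = wf, the wavelength can be worked out for the limits of human hearing. This is a sketch that assumes sound in air at roughly 343 m/s; the function name is made up for illustration.

```python
SPEED_IN_AIR = 343.0  # m/s, air at about 20 °C (assumed value)

def wavelength_m(frequency_hz, speed_m_per_s=SPEED_IN_AIR):
    """Rearranged v = w * f: wavelength = velocity / frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_per_s / frequency_hz

for f in (20, 440, 20_000):
    print(f"{f} Hz -> {wavelength_m(f):.4f} m")
```

The lowest audible tone (20 Hz) has a wavelength of about 17 m, while the highest (20,000 Hz) is under 2 cm, which shows how higher frequency goes with shorter wavelength at a fixed speed.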

Spherical sound waves (i.e. free field waves) will distribute uniformly unless obstructed by some other material. In some cases air itself acts as an attenuator of sound waves. Over long distances, high frequencies will be absorbed by air while lower frequencies will prevail. Also lower frequencies tend to bend around surfaces or walls, and higher frequencies get absorbed or reflected.

That is why you can hear muffled voices through walls and floors, but are unable to understand what is being said. Articulation in speech is composed mainly of higher frequencies.

Ref: Pitch vs Timbre

- http://www.passmyexams.co.uk/GCSE/physics/pitch-loudness-quality-of-musical-notes.html

- http://www.animations.physics.unsw.edu.au/jw/sound-pitch-loudness-timbre.htm

- http://hyperphysics.phy-astr.gsu.edu/hbase/sound/soucon.html

- http://www.fi.edu/fellows/fellow2/apr99/soundvib.html

- http://www.ngfl-cymru.org.uk/sound_-_loudness_and_pitch
