http://www.ocf.berkeley.edu/~anandk/neuro/poster.pdf

http://www.mindcontrol.se/?page_id=1509

http://beamsandstruts.com/bits-a-pieces/item/1026-telepathy

http://www.sheldrake.org/Articles%26Papers/papers/telepathy/pdf/experiment_tests.pdf

http://www.sheldrake.org/Research/telepathy/

Scientists to study synthetic telepathy

The research could lead to a communication system that would benefit soldiers on the battlefield and paralysis and stroke patients, according to lead researcher Michael D’Zmura, chair of the UCI Department of Cognitive Sciences. “Thanks to this generous grant we can work with experts in automatic speech recognition and in brain imaging at other universities to research a brain-computer interface with applications in military, medical and commercial settings,” D’Zmura says.

The brain-computer interface would use a noninvasive brain imaging technology like electroencephalography to let people communicate thoughts to each other. For example, a soldier would “think” a message to be transmitted and a computer-based speech recognition system would decode the EEG signals. The decoded thoughts, in essence translated brain waves, are transmitted using a system that points in the direction of the intended target.

“Such a system would require extensive training for anyone using it to send and receive messages,” D’Zmura says. “Initially, communication would be based on a limited set of words or phrases that are recognized by the system; it would involve more complex language and speech as the technology is developed further.”

D’Zmura will collaborate with UCI cognitive science professors Ramesh Srinivasan, Gregory Hickok and Kourosh Saberi. Joining the team are researchers Richard Stern and Vijayakumar Bhagavatula from Carnegie Mellon’s Department of Electrical and Computer Engineering and David Poeppel from the University of Maryland’s Department of Linguistics.

The grant comes from the U.S. Department of Defense’s Multidisciplinary University Research Initiative program, which supports research involving more than one science and engineering discipline. Its goal is to develop applications for military and commercial uses.

Read more at: http://phys.org/news137863959.html#jCp

synthetic telepathy


Topics in Research

Brain-computer interface

Imagined speech

Cognitive science

Related

EEG

Research collaborators

UCI cognitive science professors Ramesh Srinivasan, Gregory Hickok and Kourosh Saberi

Richard Stern and Vijayakumar Bhagavatula from Carnegie Mellon’s Department of Electrical and Computer Engineering

David Poeppel from the University of Maryland’s Department of Linguistics

Neural science course

http://www.ece.cmu.edu/courses/items/18698.html

The brain is among the most complex systems ever studied. Underlying the brain's ability to process sensory information and drive motor actions is a network of roughly 10^11 neurons, each making about 10^3 connections with other neurons. Modern statistical and machine learning tools are needed to interpret the plethora of neural data being collected, both for (1) furthering our understanding of how the brain works and (2) designing biomedical devices that interface with the brain. This course will cover a range of statistical methods and their application to neural data analysis. The statistical topics include latent variable models, dynamical systems, point processes, dimensionality reduction, Bayesian inference, and spectral analysis. The neuroscience applications include neural decoding, firing rate estimation, neural system characterization, sensorimotor control, spike sorting, and field potential analysis.

Special Topics in Applied Physics: Neural Technology, Sensing, and Stimulation

This course gives engineering insight into the operation of excitable cells, as well as circuitry for sensing and stimulating nerves. Initial background topics include diffusion, osmosis, drift, and mediated transport, culminating in the Nernst equation of cell potential. We will then explore models of the nerve, including electrical circuit models and the Hodgkin-Huxley mathematical model. Finally, we will explore aspects of inducing a nerve to fire artificially and cover circuit topologies for sensing action potentials and for stimulating nerves. If time allows, we will discuss other aspects of medical device design. Students will complete a neural stimulator or sensor design project.

Prerequisites:

18-220 or equivalent, or an understanding of basic circuits, differential equations, and electricity and magnetism. Some review of circuit theory will be provided for those who need it.
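As a small worked example of the Nernst equation named in the course description, the sketch below evaluates E = (RT/zF) ln([out]/[in]). The ion concentrations and the 310.15 K body temperature are illustrative assumptions of ours, not values from the course, and `nernst_potential` is a hypothetical helper name.

```python
import math

R = 8.314462618   # molar gas constant, J/(mol*K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_potential(conc_out, conc_in, z, temp_kelvin=310.15):
    """Equilibrium (Nernst) potential in volts for an ion of valence z.

    E = (R * T / (z * F)) * ln(conc_out / conc_in); the two concentrations
    only need to share the same unit.
    """
    if conc_out <= 0 or conc_in <= 0:
        raise ValueError("concentrations must be positive")
    return (R * temp_kelvin) / (z * F) * math.log(conc_out / conc_in)


# Illustrative mammalian concentrations in mM (assumed, not from the course)
e_k = nernst_potential(conc_out=5.0, conc_in=140.0, z=1)
e_na = nernst_potential(conc_out=145.0, conc_in=12.0, z=1)
print(f"E_K  = {e_k * 1e3:.1f} mV")
print(f"E_Na = {e_na * 1e3:.1f} mV")
```

With these assumed concentrations the potassium potential comes out near -89 mV and the sodium potential near +67 mV; equal concentrations on both sides give 0 V.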

New Topics in Signal Processing: Network Science: Modeling and Inference

Do you ever wonder how ants so successfully forage for rich sources of food, how bees move a beehive to a more suitable location, or how flocks of birds fly in formation? How can a tree falling in Ohio cause fifty-five million people in the Northeast of the US and in Canada to lose their electrical power? Why do the actions of a few people in an office in London's financial district so significantly affect the world's financial markets? Why do critical infrastructures, e.g., cellular and mobile networks, fail in times of crisis, when they are most needed? How do botnets spread and compromise millions of computers on the internet? Can companies understand the viral behavior of their three million (did you say eighty million?) mobile customers?

These and other examples provide the background and motivation for this course, whose goal is the study of relatively dumb agents that sense, process, and cooperate locally but whose collective, coordinated activity leads to the emergence of complex behaviors. Among other things, the course will develop basic tools to understand: i) how to model these highly networked, large-scale structures (e.g., colonies of agents, networks of physical systems, cyber-physical systems); ii) how to predict the behavior of these networked systems; and iii) how to derive and study the properties (e.g., convergence and performance) of distributed algorithms for inference and data assimilation.

The course will develop graph representations and introduce tools from spectral graph theory; will cover the basics of queueing theory, Markov point processes, and stochastic networks to predict behaviors under several types of stress conditions and asymptotic regimes; and will explore consensus algorithms and several classes of distributed inference algorithms operating under infrastructure failures (intermittent random sensor and channel failures), different resource constraints (e.g., power or bandwidth), or random protocols (e.g., gossip).
The course is essentially self-contained. There will be a mix of homework, midterm exams, and projects. Students will take an active role by exploring examples of applications and applying network science concepts to fully develop the analysis of their preferred applications.
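One standard consensus scheme of the kind the course mentions is distributed average consensus: every node repeatedly replaces its value with a weighted average of its neighbors' values, using only local communication. The sketch below uses Metropolis weights on an undirected graph; the weight choice, the function names, and the 5-node ring are our assumptions for illustration, not course material.

```python
def metropolis_weights(n, edges):
    """Symmetric, doubly stochastic weight matrix built from an undirected edge list."""
    deg = [0] * n
    for i, j in edges:
        deg[i] += 1
        deg[j] += 1
    W = [[0.0] * n for _ in range(n)]
    for i, j in edges:
        w = 1.0 / (1 + max(deg[i], deg[j]))
        W[i][j] = w
        W[j][i] = w
    # Self-weight makes every row sum to one
    for i in range(n):
        W[i][i] = 1.0 - sum(W[i][k] for k in range(n) if k != i)
    return W


def average_consensus(values, edges, iterations=200):
    """Each node repeatedly mixes its value with its neighbors' values: x <- W x."""
    n = len(values)
    W = metropolis_weights(n, edges)
    x = list(values)
    for _ in range(iterations):
        x = [sum(W[i][k] * x[k] for k in range(n)) for i in range(n)]
    return x


# A 5-node ring: each node only talks to its two neighbors
edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
readings = [10.0, 2.0, 7.0, 4.0, 1.0]
print(average_consensus(readings, edges))
```

Because the weight matrix is symmetric and each row sums to one, the network average is preserved at every step, and on a connected graph every node converges to it (here 4.8).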

Pre-requisites: Probability theory.

http://www.uni-oldenburg.de/en/academic-research/main-areas-of-research/neurosensors/

molecular biology or image processing. With their interdisciplinary approach they investigate the processes through which the brain produces an internal image of the world on the basis of the stimuli it receives from the sensory organs, whereby the focus is on the interaction between different sensations.

Advanced Digital Signal Processing

This course will examine a number of advanced topics and applications in one-dimensional digital signal processing, with emphasis on optimal signal processing techniques. Topics will include modern spectral estimation, linear prediction, short-time Fourier analysis, adaptive filtering, plus selected topics in array processing and homomorphic signal processing, with applications in speech and music processing.

4 hrs. lec.

Prerequisites:

18-491 and 36-217
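Linear prediction, one of the listed topics, is commonly solved with the Levinson-Durbin recursion on the autocorrelation sequence. A minimal sketch, assuming the autocorrelation method and the predictor convention x_hat[n] = sum_k a_k x[n-k]; the function names are ours.

```python
def autocorrelation(x, max_lag):
    """Autocorrelation r[k] = sum_n x[n] * x[n + k] for k = 0..max_lag (unnormalized)."""
    n = len(x)
    return [sum(x[i] * x[i + k] for i in range(n - k)) for k in range(max_lag + 1)]


def levinson_durbin(r, order):
    """Solve the autocorrelation normal equations for a forward linear predictor.

    Returns (a, err): the prediction is x_hat[n] = sum_{k=1..order} a[k-1] * x[n-k],
    and err is the remaining prediction-error power.
    """
    if r[0] <= 0:
        raise ValueError("r[0] must be positive (non-zero signal energy)")
    a = []
    err = r[0]
    for m in range(1, order + 1):
        # Reflection coefficient for stage m
        acc = r[m] - sum(a[k] * r[m - 1 - k] for k in range(m - 1))
        k_m = acc / err
        # Order update: a_new[k] = a[k] - k_m * a[m-2-k], then append k_m
        a = [a[k] - k_m * a[m - 2 - k] for k in range(m - 1)] + [k_m]
        err *= 1.0 - k_m * k_m
    return a, err


x = [0.9 ** n for n in range(200)]
coeffs, residual = levinson_durbin(autocorrelation(x, 2), order=2)
print(coeffs, residual)
```

For the decaying exponential x[n] = 0.9^n, a first-order predictor with a_1 = 0.9 already explains the signal, so the second coefficient comes out essentially zero.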

Image, Video, and Multimedia

The course is designed to explore video computing algorithms, including image and video compression, steganography, object detection and tracking, motion analysis, 3D display, augmented reality, telepresence, sound recognition, video analytics, and video search. The course emphasizes experimenting with real-world videos. The assignments consist of four projects, readings, and presentations.

The course studies image processing, image understanding, and video sequence analysis. Image processing deals with deterministic and stochastic image digitization, enhancement, restoration, and reconstruction. This includes image representation, image sampling, image quantization, image transforms (e.g., DFT, DCT, Karhunen-Loeve), stochastic image models (Gaussian fields, Markov random fields, AR, ARMA), and histogram modeling. Image understanding covers image multiresolution, edge detection, shape analysis, texture analysis, and recognition. This includes pyramids, wavelets, 2D shape description through contour primitives, and deformable templates (e.g., 'snakes'). Video processing concentrates on motion analysis, including motion estimation methods (e.g., optical flow and block-based methods) and motion segmentation. The course emphasizes experimenting with the application of algorithms to real images and video. Students are encouraged to apply the algorithms presented to problems in a variety of application areas, e.g., synthetic aperture radar images, medical images, entertainment video, and video compression.

Section P is for Portugal students only.
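The block-based motion estimation mentioned above can be sketched as exhaustive block matching: for a block in the current frame, search a small window in the reference frame for the displacement with the lowest sum of absolute differences (SAD). The SAD criterion, the full-search strategy, and the names below are our assumptions; practical codecs often use faster search patterns.

```python
def sad(cur, ref, top, left, bsize, dy, dx):
    """Sum of absolute differences between a block of `cur` and a displaced block of `ref`."""
    total = 0
    for i in range(bsize):
        for j in range(bsize):
            total += abs(cur[top + i][left + j] - ref[top + i + dy][left + j + dx])
    return total


def match_block(cur, ref, top, left, bsize, search):
    """Full-search block matching.

    Returns the displacement (dy, dx) such that the bsize x bsize block of `cur`
    at (top, left) best matches the block of `ref` at (top + dy, left + dx).
    """
    rows, cols = len(ref), len(ref[0])
    best = None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            r0, c0 = top + dy, left + dx
            # Skip candidate blocks that would fall outside the reference frame
            if r0 < 0 or c0 < 0 or r0 + bsize > rows or c0 + bsize > cols:
                continue
            cost = sad(cur, ref, top, left, bsize, dy, dx)
            if best is None or cost < best[0]:
                best = (cost, dy, dx)
    if best is None:
        raise ValueError("no candidate block fits inside the reference frame")
    return best[1], best[2]


# Synthetic frames: unique reference values; the current frame's content
# comes from 2 rows down and 1 column left in the reference frame.
ref = [[12 * i + j for j in range(12)] for i in range(12)]
cur = [[ref[i + 2][j - 1] if i + 2 < 12 and j >= 1 else 0 for j in range(12)]
       for i in range(12)]
print(match_block(cur, ref, top=4, left=4, bsize=4, search=3))
```

The synthetic frames are constructed so that exactly one displacement gives zero SAD, and the search recovers it.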

Special Topics in Signal Processing: Cognitive Video

Rapidly growing volumes of mobile and online video have enriched our digital lives. They also create great challenges for our computing and networking technologies, ranging from video indexing, analysis, and retrieval to synthesis. The course covers the state of the art in video processing, video understanding, and video interaction technologies, including video annotation, eye tracking, motion capture, telepresence, event and object detection, augmented reality, video summarization, and video interface design. Students will have hands-on experience with devices such as the Kinect 3D sensor, Android smartphones, eye trackers, motion capture (mocap) systems, and infrared cameras. The class assignments include labs and a class project.

Pre-requisites:

18-290 or instructor approval; MATLAB or C, calculus, and matrix computation.

Multimedia Communications: Coding, Systems, and Networking

This course introduces technologies for multimedia communications. We will address how to efficiently represent multimedia data, including video, image, and audio, and how to deliver them over a variety of networks. In the coding aspect, state-of-the-art compression technologies will be presented. Emphasis will be given to a number of standards, including H.26x, MPEG, and JPEG. In the networking aspect, special considerations for sending multimedia over ATM, wireless, and IP networks, such as error resilience and quality of service, will be discussed. The H.32x series, standards for audiovisual communication systems in various network environments, will be described. Current research results in multimedia communications will be reviewed through student seminars in the last weeks of the course.

Special Topics in Signal Processing: Registration in Bioimaging

This course will cover the fundamentals of image matching (registration) methods with applications to biomedical engineering. As the fundamental step in image data fusion, registration methods have found wide-ranging applications in biomedical engineering, as well as in other engineering areas, and have become a major topic in image processing research. Specific topics to be covered include manual and automatic landmark-based, intensity-based, rigid, and nonrigid registration methods. Applications to be covered include multi-modal image data fusion, artifact (motion and distortion) correction and estimation, atlas-based segmentation, and computational anatomy. Course work will include Matlab programming exercises, reading of scientific papers, and independent projects. Upon successful completion, the student will be able to develop his or her own solution to an image processing problem that involves registration.

Prerequisites:

18-396 or permission of the instructor, working knowledge of Matlab, and some image processing experience.

This course is cross listed with 42-708 Special Topics: Registration in Bioimaging
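For the landmark-based rigid case listed above, the least-squares rotation and translation between two sets of corresponding 2-D landmarks have a closed form. The sketch below is one such solution under our own assumptions (2-D only, known point correspondences, no scaling); `rigid_register_2d` is a hypothetical name, not a course API.

```python
import math


def rigid_register_2d(src, dst):
    """Least-squares rotation + translation mapping 2-D landmarks src onto dst.

    Returns (theta, tx, ty) such that dst[i] ~= R(theta) @ src[i] + (tx, ty).
    """
    n = len(src)
    if n != len(dst) or n < 2:
        raise ValueError("need at least two corresponding landmark pairs")
    # Centroids of both landmark sets
    sx = sum(p[0] for p in src) / n
    sy = sum(p[1] for p in src) / n
    qx0 = sum(q[0] for q in dst) / n
    qy0 = sum(q[1] for q in dst) / n
    # Cross (sin) and dot (cos) terms of the centered point sets
    num = den = 0.0
    for (px, py), (qx, qy) in zip(src, dst):
        px, py, qx, qy = px - sx, py - sy, qx - qx0, qy - qy0
        num += px * qy - py * qx
        den += px * qx + py * qy
    theta = math.atan2(num, den)
    c, s = math.cos(theta), math.sin(theta)
    # Translation maps the rotated source centroid onto the target centroid
    tx = qx0 - (c * sx - s * sy)
    ty = qy0 - (s * sx + c * sy)
    return theta, tx, ty


# Landmarks rotated by 0.5 rad and shifted by (2, -1)
src = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 1.0)]
dst = [(math.cos(0.5) * x - math.sin(0.5) * y + 2.0,
        math.sin(0.5) * x + math.cos(0.5) * y - 1.0) for x, y in src]
print(rigid_register_2d(src, dst))
```

With noise-free landmarks the recovered parameters match the ones used to generate `dst`; with noisy landmarks the same formula gives the least-squares fit.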

Special Topics in Signal Processing: Design and Implementation of Speech Recognition Systems

Voice recognition systems invoke concepts from a variety of fields including speech production, algebra, probability and statistics, information theory, linguistics, and various aspects of computer science. Voice recognition has therefore largely been viewed as an advanced science, typically meant for students and researchers who possess the requisite background and motivation.

In this course we take an alternative approach. We present voice recognition systems through the perspective of a novice. Beginning from the very simple problem of matching two strings, we present the algorithms and techniques as a series of intuitive and logical increments, until we arrive at a fully functional continuous speech recognition system.
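The description starts from matching two strings. One common way to make that concrete (our assumption about how such a progression usually begins) is edit distance computed by dynamic programming; the same recurrence with a numeric local cost becomes dynamic time warping (DTW), which template-based recognizers use to align an utterance with a stored template. Function names are ours.

```python
def edit_distance(a, b):
    """Levenshtein distance: fewest insertions, deletions, substitutions turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i] + [0] * len(b)
        for j, cb in enumerate(b, start=1):
            cur[j] = min(prev[j] + 1,               # delete ca
                         cur[j - 1] + 1,            # insert cb
                         prev[j - 1] + (ca != cb))  # substitute, or match for free
        prev = cur
    return prev[-1]


def dtw_distance(x, y):
    """Dynamic time warping cost between two 1-D sequences, local cost |x_i - y_j|."""
    inf = float("inf")
    n, m = len(x), len(y)
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(x[i - 1] - y[j - 1])
            # Each frame may repeat (stretch) or advance in either sequence
            D[i][j] = cost + min(D[i - 1][j], D[i][j - 1], D[i - 1][j - 1])
    return D[n][m]


print(edit_distance("kitten", "sitting"))
print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))
```

Unlike edit distance, DTW charges nothing for stretching a sequence in time, so a slowly spoken version of the same pattern still aligns at zero cost.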

Following the philosophy that the best way to understand a topic is to work on it, the course will be project oriented, combining formal lectures with required hands-on work. Students will be required to work on a series of projects of increasing complexity. Each project will build on the previous one, so that the incremental complexity of each project will be minimal and eminently doable. By the end of the course, merely by completing the series of projects, students will have built their own fully functional speech recognition systems.

Grading will be based on project completion and presentation.

Prerequisites: Mandatory: Linear Algebra. Basic Probability Theory. Recommended: Signal Processing. Coding Skills: This course will require significant programming from the students. Students must be able to program fluently in at least one language (C, C++, Java, Python, LISP, and Matlab are all acceptable).

ML

Signal Processing is the science that deals with extraction of information from signals of various kinds. This has two distinct aspects -- characterization and categorization. Traditionally, signal characterization has been performed with mathematically-driven transforms, while categorization and classification are achieved using statistical tools.

Machine learning aims to design algorithms that learn about the state of the world directly from data.

An increasingly popular trend has been to develop and apply machine learning techniques to both aspects of signal processing, often blurring the distinction between the two.

This course discusses the use of machine learning techniques to process signals. We cover a variety of topics, ranging from data-driven approaches for characterizing signals such as audio (including speech), images, and video, to machine learning methods for a variety of speech and image processing problems.

Prerequisites: Linear Algebra, Basic Probability Theory, Signal Processing and Machine Learning.

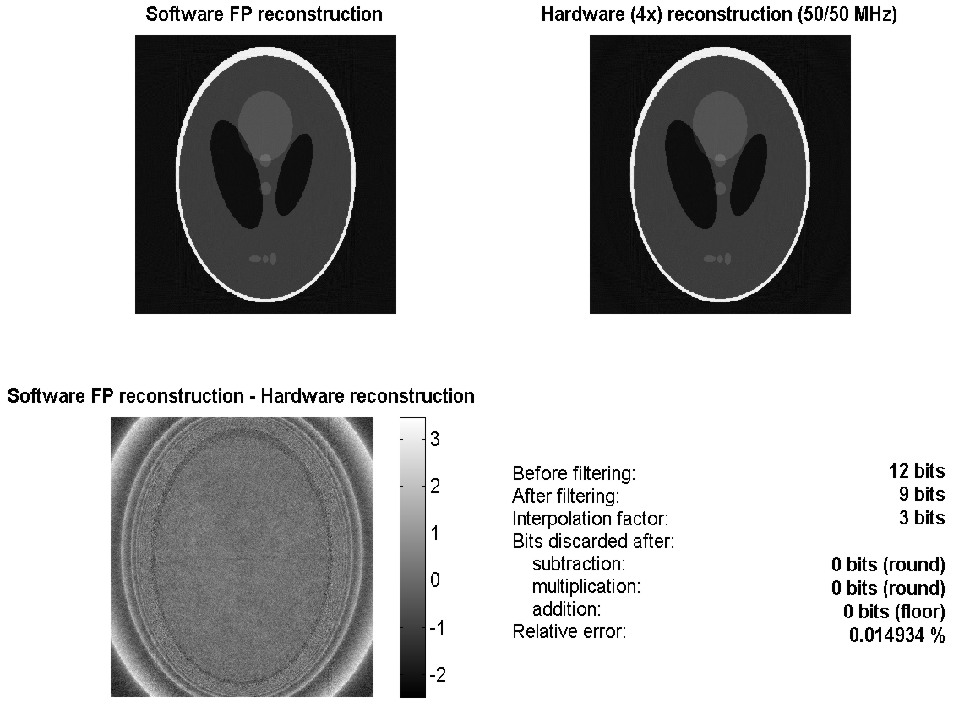

Define g(phi,s) as a 1-D projection at an angle phi. g(phi,s) is the line integral of the image intensity f(x,y) along a line l that lies a distance s from the origin at an angle phi off the x-axis.
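A discrete approximation of g(phi,s) can be sketched for a sampled image by dropping each pixel's value into the s-bin its center falls in. The pixel-center coordinates, the unit bin width, and this nearest-bin ("pixel-driven") approximation of the line integral are our assumptions; the line geometry s = x sin(phi) - y cos(phi) matches the back-projection pseudocode later in these notes.

```python
import math


def project(image, phi):
    """Approximate the projection g(phi, s) of a square image by bin summation.

    Pixel (i, j) has center x = j - (n-1)/2, y = (n-1)/2 - i, and its value is
    added to the bin containing s = x*sin(phi) - y*cos(phi).  Bin k covers
    s in [k - n, k - n + 1), so 2n bins hold every pixel of an n x n image.
    """
    n = len(image)
    c = (n - 1) / 2.0
    bins = [0.0] * (2 * n)
    sin_p, cos_p = math.sin(phi), math.cos(phi)
    for i, row in enumerate(image):
        y = c - i
        for j, value in enumerate(row):
            x = j - c
            s = x * sin_p - y * cos_p
            bins[math.floor(s) + n] += value
    return bins


img = [[1, 2, 0, 0],
       [0, 3, 0, 0],
       [0, 0, 0, 4],
       [5, 0, 0, 0]]
for phi in (0.0, math.pi / 4, math.pi / 2):
    print(round(phi, 3), project(img, phi))
```

Because every pixel lands in exactly one bin, each projection preserves the total image intensity, which is a quick sanity check for any projector.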

We can show the Fourier Slice Theorem in the following way.

The 1D Fourier Transform of g is given by:

$$G(\phi,\omega) = \int_{-\infty}^{\infty} g(\phi,s)\, e^{-j2\pi\omega s}\, ds$$
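Writing the projection with a delta function along the line s = x sin(phi) - y cos(phi) (the geometry used in the back-projection pseudocode), the sifting property collapses the integral over s and yields the slice theorem. The 2-D transform F and the e^{-j2 pi omega s} sign convention are our choices:

```latex
g(\phi,s) = \iint f(x,y)\,\delta(x\sin\phi - y\cos\phi - s)\,dx\,dy

G(\phi,\omega) = \int_{-\infty}^{\infty} g(\phi,s)\,e^{-j2\pi\omega s}\,ds
               = \iint f(x,y)\,e^{-j2\pi\omega(x\sin\phi - y\cos\phi)}\,dx\,dy
               = F(\omega\sin\phi,\; -\omega\cos\phi)

\text{where } F(u,v) = \iint f(x,y)\,e^{-j2\pi(ux + vy)}\,dx\,dy
```

In words: the 1-D transform of the projection at angle phi equals the 2-D transform of f along the line through the origin in direction (sin phi, -cos phi), perpendicular to the integration lines.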

With only one back projection, not much information about the original image is revealed.

With 4 back projections, we can see some of the basic features start to emerge. The two squares on the left side start to come in, and the main ellipse looks like a diamond.

At 8 back projections, our image is finally starting to take shape. We can see the squares and the circles well, and we can make out the basic shape of the main ellipse.

With 15 back projections, we can see the bounds of the main ellipse very well, and the squares and circles are well defined. The lines in the center of the ellipse appear as blurry triangles. We also have a lot of undesired residuals in the background (outside the main ellipse).

At 60 back projections, our reconstructed image looks very nice. We still have some patterns outside the ellipse, and there are streaks from the edges of the squares all the way out to the edge of the image. These appear because the edge of a square is such a sharp transition at 0 and 90 degrees that, when we pass the projections through the ramp filter, there are sharp spikes in the filtered projections. These never quite get smoothed out.
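The edge spikes described above can be reproduced with the spatial-domain band-limited ramp (Ram-Lak) kernel: h[0] = 1/(4 tau^2), h[m] = 0 for even m, and h[m] = -1/(pi^2 m^2 tau^2) for odd m, where tau is the sample spacing. This is a sketch under our own assumptions; the notes do not say which ramp filter the MATLAB code used (a frequency-domain ramp is equally common).

```python
import math


def ramp_kernel(m, tau=1.0):
    """Tap h[m] of the band-limited ramp (Ram-Lak) filter for sample spacing tau."""
    if m == 0:
        return 1.0 / (4.0 * tau * tau)
    if m % 2 == 0:
        return 0.0
    return -1.0 / (math.pi ** 2 * m * m * tau * tau)


def ramp_filter(projection, tau=1.0):
    """Convolve one projection with the ramp kernel; output has the input's length.

    Samples outside the projection are treated as zero, and the sum is scaled by
    tau as a Riemann-sum approximation of the convolution integral.
    """
    n = len(projection)
    return [tau * sum(projection[k] * ramp_kernel(i - k, tau) for k in range(n))
            for i in range(n)]


# A projection of a flat object with sharp edges, like a square seen at 0 degrees
rect = [0.0] * 10 + [1.0] * 10 + [0.0] * 10
filtered = ramp_filter(rect)
print([round(v, 3) for v in filtered])
```

The flat rectangle produces a positive spike just inside each edge and a negative one just outside, while the plateau stays close to zero; back projection smears these spikes along each projection direction.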

The MATLAB code for the filtered back projection worked very nicely. The basic algorithm we used for filtered back projection was as follows, where f(x,y) is the image we are trying to reconstruct and q(phi,s) is the filtered projection at angle phi:

Initialize f(x,y) = 0 for every pixel
For each phi do
    For each (x,y) do
        Find the point in the filtered projection that corresponds
        to (x,y) at angle phi, in other words
            s = x sin(phi) - y cos(phi)
        f(x,y) = f(x,y) + q(phi,s)    (interpolating q when s falls between samples)
    end
end
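The loop above can be written out directly in Python. We assume filtered projections sampled at unit spacing in s and centered on s = 0, linear interpolation between samples, pixel centers on a unit grid, and angles spread evenly over [0, pi), which gives the pi / (number of angles) scale factor; `back_project` is a hypothetical name.

```python
import math


def back_project(filtered, phis, n):
    """Accumulate filtered projections q(phi, s) onto an n x n grid.

    filtered[a][k] is q(phis[a], s) sampled at s = k - (len(filtered[a]) - 1) / 2.
    Pixel (i, j) has center x = j - (n-1)/2, y = (n-1)/2 - i.
    """
    c = (n - 1) / 2.0
    f = [[0.0] * n for _ in range(n)]
    for q, phi in zip(filtered, phis):
        nbins = len(q)
        centre = (nbins - 1) / 2.0
        sin_p, cos_p = math.sin(phi), math.cos(phi)
        for i in range(n):
            y = c - i
            for j in range(n):
                x = j - c
                s = x * sin_p - y * cos_p   # same line geometry as the pseudocode
                t = s + centre              # fractional sample index
                k0 = math.floor(t)
                w = t - k0
                lo = q[k0] if 0 <= k0 < nbins else 0.0
                hi = q[k0 + 1] if 0 <= k0 + 1 < nbins else 0.0
                f[i][j] += (1.0 - w) * lo + w * hi   # linear interpolation in s
    # Assumption: phis evenly cover [0, pi), so each angle weighs dphi = pi / len(phis)
    scale = math.pi / len(phis)
    return [[v * scale for v in row] for row in f]


# A single spike at s = 0 for phi = 0 lights up only the center row (where y = 0)
spike = [0.0] * 11
spike[5] = 1.0
image = back_project([spike], [0.0], n=5)
for row in image:
    print([round(v, 3) for v in row])
```

The single-spike case shows the smear each individual projection contributes; a full reconstruction sums such smears over all angles.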

Since we used MATLAB for all the image processing, we were able to vectorize the computations and cut out the entire inner loop (which is really two loops, one for x and one for y). The run times were blazingly fast: our algorithm took about 0.2 seconds per back projection on our phantom when running on a SPARC 5.
